I recently attended the SAS Analyst Summit in Steamboat Springs, Colo. (Twitter hashtag #SASSB). The event offers an occasion for the company to discuss its direction and to assess its strengths and potential weaknesses. SAS is privately held, so customers and prospects cannot subject its performance to the same level of scrutiny as public companies, and thus events like this one provide a valuable source of additional information.

SAS has been a dominant player in the analytics marketplace for years, celebrating its 40th anniversary this year and reporting US$3.16 billion in 2015 revenue. Both of these are significant accomplishments in the software market. And while validation of vendors, including the company’s viability, often ranks as the least important of the seven product evaluation criteria in our benchmark research, SAS ranks as one of the most commonly used tools for predictive analytics: One-third (33%) of participants in our predictive analytics benchmark research use it.

The company provides a very broad technology stack including information management, business intelligence, and many types of analytics and visualization. In addition, SAS offers domain-specific applications built on top of these capabilities. A quick count on the products and solutions page on its website shows hundreds of entries, and SAS executives at the event asserted that the company will generate more than 100 releases this year. So one challenge for customers, as with any other large vendor, is navigating through this maze to find something that suits their needs. Also, a vendor with a large portfolio is generally not as nimble as one with fewer products. On the other hand, a large vendor can more easily manage the interdependencies between products for its customers. That is, if an organization licenses a variety of products from multiple vendors, it often falls to the buyer to keep different versions from different vendors in sync.

At this year’s event, big data was much less prominent on the agenda than in the past. Over the last several years SAS has made significant investments in big data, supporting Hadoop and in-memory processing to create a scalable, high-performance infrastructure. This year it focused on end-user tools and prebuilt applications for working with data and analytics. In particular, SAS presenters identified three areas of focus in its technology investments: analytics, data management and visualization.

My colleague Mark Smith has written about the importance of visual discovery. SAS delivers visualization capabilities through its Visual Analytics product line, in which it continues to invest. A speaker claimed that more than 14,000 servers were licensed to run Visual Analytics as of 2015, up from 8,400 in 2014. SAS will be combining the capabilities of Visual Explorer and Visual Designer to create a more unified user experience. The company also is introducing visual data preparation features that enable users to explore and profile data as part of the data integration and transformation process.

No analysis should overlook SAS’s core competency of analytics. I was impressed with its demonstrations of automated evaluation and selection of different predictive analytics algorithms as part of its visualization capabilities. Visual Analytics offers a modern, intuitive user interface for analyzing big data sets, but it has only limited collaboration capabilities. Our big data analytics benchmark research finds that more than three in four respondents (78%) consider collaboration important or very important. SAS will need to make further investments to support collaboration.

SAS continues to advance another product, Visual Investigator, which it plans to bring to market more broadly in 2016. Announced last year as part of the Security Intelligence products, it is targeted at what SAS calls the “intelligence analyst,” a role between business analyst and data scientist. This tool has great potential as a standalone product as it combines critical components of tasks, activities, cases, and taking action that have broad appeal across all analytic roles and beyond fraud and security investigations.

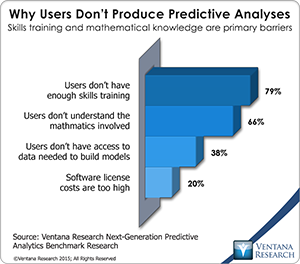

At the event, several analysts questioned SAS executives about the impact of open source systems on the company. This was a hot topic generating much discussion. Executives acknowledged that the company has lost ground to open source systems in the educational market and in response has reinvigorated its efforts there. The SAS University Edition is available for download and on Amazon Web Services. It is also available in a cloud-based offering called SAS On Demand for Academics. Dozens of universities that offer master’s programs in analytics are working with SAS to help address the shortage of skilled analytics resources. Our Next Generation Predictive Analytics research shows that lack of skills training and knowledge of the mathematics involved in predictive analytics are significant obstacles to users producing their own analyses, cited by 79% and 66% of participants respectively. It is wise for SAS to invest in supporting these programs. If your organization wants to hire or train additional analytics staff, these programs may be a valuable resource.

On the technology front, executives pointed out that SAS has embraced open source systems, with support for Linux, Hadoop and the Python and Lua programming languages. However, it does not plan to support R, which is used by more than half (58%) of participants in our predictive analytics research. The company also plans to introduce a set of open application programming interfaces (APIs) to encourage more developers to work with SAS and create a marketplace of third-party products. The key will be whether the collection of these efforts makes a significant impact on the developer community, which is where open source tools often gain their foothold in organizations. At a minimum I expect SAS will need to offer a “freemium” version of products if it really wants to win over the development community.

This event provides a valuable window into SAS’s performance and strategy. The company has proven its staying power and ability to be a relatively fast follower of industry trends to remain competitive in the constantly evolving business intelligence and analytics landscape. For organizations that consider expanding their use of analytics, I recommend placing SAS on the list of vendors they evaluate.

Regards,

David Menninger

SVP & Research Director

I want to share my observations from the recent annual SAS analyst briefing. SAS is a huge software company with a unique culture and a history of success. Because it is privately held, SAS is not required to make the same financial disclosures as publicly held organizations, but it released enough information to suggest another successful year, with more than $2.7 billion in revenue and 10 percent growth in its core analytics and data management businesses. Smaller segments showed even higher growth rates. With only selective information disclosed, it’s hard to dissect the numbers to spot specific areas of weakness, but the top-line figures suggest SAS is in good health.

One of the impressive figures SAS chooses to disclose is its investment in research and development, which at 24 percent of total revenue is a significant amount. Based on presentations at the analyst event, it appears a large amount of this investment is being directed toward big data and cloud computing. At last year’s event SAS unveiled plans for big data, and much of the focus at this year’s event was on the company’s current capabilities, which consist of high-performance computing and analytics.

SAS has three ways of processing and managing large amounts of data. Its high-performance computing (HPC) capabilities are effectively a massively parallel processing (MPP) database, albeit with rich analytic functionality. The main benefit of HPC is scalability; it allows processing of large data sets.

A variation on the HPC configuration includes pushing down operations into third-party databases for “in-database” analytics. Currently, in-database capabilities are available from Teradata and EMC Greenplum, plus SAS has announced plans to integrate with other database technologies. The main benefit of in-database processing is that it minimizes the need to move data out of the database and into the SAS application, which saves time and effort.

More recently, SAS introduced a third alternative that it calls High Performance Analytics (HPA), which provides in-memory processing and can be used with either configuration. The main benefit of in-memory processing is enhanced performance.

These different configurations each have advantages and disadvantages, but having multiple alternatives can create confusion about which one to use. As a general rule of thumb, if your analysis involves a relatively small amount of data, perhaps as much as a couple of gigabytes, you can run HPA on a single node. If your system involves larger amounts of data coming from a database on multiple nodes, you will want to install HPA on each node to be able to process the information more quickly and handle internode transfers of data.

SAS also has the ability to work with data in Hadoop. Users can access information in Hadoop via Hive to interact with tables as if they were native SAS data sets. The analytic processing is done in SAS, but this approach eliminates the need to extract the data from Hadoop and put it into SAS. Users can also invoke MapReduce jobs in Hadoop from the SAS environment. To be clear, SAS does not automatically generate these jobs or assist users in creating them, but this does offer a way for SAS users to create a single process that mixes SAS and Hadoop processing.

I’d like to see SAS push down more processing into the Hadoop environment and make more of the Hadoop processing automatic. SAS plans to introduce a new capability later in the year called LASR Analytic Server that is supposed to deliver better integration with Hadoop as well as better integration with the other distributed databases SAS supports, such as EMC Greenplum and Teradata.

There were some other items to note at the event. One is a new product for end-user interactive data visualization called Visual Analytics Explorer, which is scheduled to be introduced during the first quarter of this year. For years SAS was known for having powerful analytics but lackluster user interfaces, so this came as a bit of a surprise, but the initial impression shared by many in attendance was that SAS has done a good job on the design of the user interface for this product.

In the analytics software market, many vendors have introduced products recently that provide interactive visualization. Companies such as QlikView, Tableau and Tibco Spotfire have built their businesses around interactive visualization and data exploration. Within the last year, IBM introduced Cognos Insight, MicroStrategy introduced Visual Insight, and Oracle introduced visualization capabilities in its Exalytics appliance. SAS customers will soon have the option of using an integrated product rather than a third-party product for these visualization capabilities.

Based on SAS CTO Keith Collins’s presentation I expect to see SAS making a big investment in SaaS (software as a service, pun intended) and other cloud offerings, including platform as a service and infrastructure as a service. Collins outlined the company’s OnCloud initiative, which begins with offering some applications on demand and will roll out additional capabilities over the next two years. SAS plans full cloud support for its products, including a self-service subscription portal, developer capabilities and a marketplace for SAS and third-party cloud-based applications. It also plans to support public cloud, private cloud and hybrid configurations. Since SAS already offers its products on a subscription basis, the transition to a SaaS offering should be relatively easy from a financial perspective. This move is consistent with the market trends identified in our Business Data in the Cloud benchmark research. We also see other business intelligence vendors such as MicroStrategy and information management vendors such as Informatica adopting similarly broad commitments to cloud-based versions of their products.

Overall, SAS continues to execute well. Its customers should welcome these new developments, particularly the interactive visualization. The big-data strategy is still too SAS-centric, focused primarily on extracting information from Hadoop and other databases. I expect that the upcoming LASR Analytic Server will leverage these underlying MPP environments better. The cloud offerings will make it easier for new customers to evaluate the SAS products. I recommend you keep an eye on these developments as they come to market.

Regards,

David Menninger – VP & Research Director
