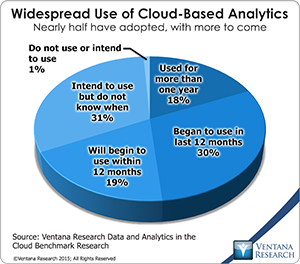

Cloud-based computing has become widespread, particularly in line-of-business applications from vendors such as Salesforce and SuccessFactors. Our benchmark research also suggests a rise in the acceptance of cloud-based analytics. We’ve seen the emergence and growth of cloud-only analytics vendors such as Domo and GoodData as well as cloud-based delivery by nearly all the on-premises analytics vendors. Almost half (48%) of organizations in our benchmark research on data and analytics in the cloud are using cloud-based analytics today, and two-thirds said they expect to be using cloud-based analytics within 12 months. In fact, only 1 percent said they do not intend to use cloud-based analytics at some point. This popularity leads to the question of how to maximize the value of investments in cloud-based analytics. We assert that one of the most important best practices for cloud-based analytics is to empower business users with modern analytics tools they can work with without relying on IT.

Part of the premise of cloud computing in general is to reduce reliance on in-house IT. Line-of-business groups are drawn to the cloud because it enables them to concentrate on the business at hand. They don’t have to wait for IT to set up systems and often can purchase cloud-based services without a capital requisition process. Not only do users want this independence, but cloud-based systems benefit IT, too, by reducing the administrative burden – there’s no need to acquire, install and configure hardware and the associated software. They also help reduce ongoing maintenance since some of that is the responsibility of the cloud application provider.

Cloud-based analytics have benefits that go beyond reducing the administrative burden. The benefits that organizations in our research most often ranked first or second were improved communication and knowledge sharing (39%), improved efficiency in business processes (35%) and decreased time to market (24%). In the context of cloud-based applications of any type, these findings should not come as a surprise. These systems enable access to data from any device in any location. Ready access to information should improve communication, efficiency and consistency. Workers can review and share information as they are performing their jobs in the field, on the shop floor, in the warehouse or when meeting with customers. In addition, more than half (52%) of organizations reported improved data quality and data management as a benefit.

For these and other reasons users want to be self-sufficient. Usability is consistently the most important software evaluation criterion in our various benchmark research studies. In the data and analytics in the cloud research, usability was the highest-ranked of seven evaluation criteria: Almost two-thirds (63%) of participants said it is very important.

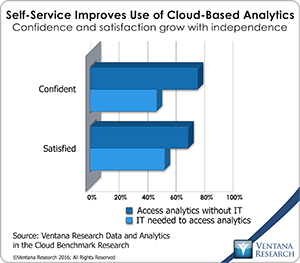

However, the research also finds that most users do not access their cloud-based analytics without the help of IT. Only 40 percent said they are able to analyze their data by themselves. Is this important? If we look at the results organizations are able to achieve, the answer is yes. Those that operate without IT are both more confident (77% vs. 44%) and more often satisfied (71% vs. 55%) in their ability to use cloud-based analytics than those that do not.

As our research shows, cloud-based analytics has arrived. Empowering business users makes it possible to improve business outcomes. The IT organization will be free to focus its attention on critical issues only it can address. Thus modern tools for cloud-based analytics can benefit both the lines of business and IT.

Regards,

David Menninger

SVP & Research Director

Follow Me on Twitter @dmenningerVR and Connect with me on LinkedIn.

The emerging Internet of Things (IoT) is an extension of digital connectivity to devices and sensors in homes, businesses, vehicles and potentially almost anywhere. This innovation means that virtually any device can generate and transmit data about its operations – data to which analytics can be applied to facilitate monitoring and a range of automatic functions. To do these tasks IoT requires what Ventana Research calls operational intelligence (OI), a discipline that has evolved from the capture and analysis of instrumentation, networking and machine-to-machine interactions of many types. We define operational intelligence as a set of event-centered information and analytic processes operating across an organization that enable people to use that event information to take effective actions and make optimal decisions. Ventana Research first began covering operational intelligence over a decade ago.

In many industries, organizations can gain competitive advantage if they reduce the elapsed time between an event occurring and actions taken or decisions made in response to it. Existing business intelligence (BI) tools provide useful analysis of and reporting on data drawn from previously recorded transactions, but to improve competitiveness and maximize efficiencies organizations are concluding that employees and processes in IT, business operations and front-line customer sales, service and support also need to be able to detect and respond to events as they happen.

Both business objectives and regulations are driving demand for new operational intelligence technology and practices. By using them many activities can be managed better, among them manufacturing, customer engagement processes, algorithmic trading, dynamic pricing, yield management, risk management, security, fraud detection, surveillance, supply chain and call center optimization, online commerce and gaming. Success in efforts to combat money laundering, terrorism or other criminal behavior also depends on reducing information latency through the application of new techniques.

The evolution of operational intelligence, especially in conjunction with IoT, is encouraging companies to revisit their priorities and spending for information technology and application management. However, sorting out the range of options poses a challenge for both business and IT leaders. Some see potential value in expanding their network infrastructure to support OI. Others are implementing event processing (EP) systems that employ new technology to detect meaningful patterns, anomalies and relationships among events. Increasingly, organizations are using dashboards, visualization and modeling to notify nontechnical people of events and enable them to understand their significance and take appropriate and immediate action.

As with any innovation, using OI for IoT may require substantial changes to organizations. These are among the challenges they face as they consider adopting this evolving operational intelligence:

- They find it difficult to evaluate the business value of enabling real-time sensing of data and event streams using radio frequency identification (RFID) tags, agents and other systems embedded not only in physical locations like warehouses but also in business processes, networks, mobile devices, data appliances and other technologies.

- They lack an IT architecture that can support and integrate these systems as the volume, variety and frequency of information increase. In addition, our previous operational intelligence research shows that these data sources are incomplete or inadequate in nearly two out of five organizations.

- They are uncertain how to set reasonable business and IT expectations, priorities and implementation plans for important technologies that may conflict or overlap. These can include BI, event processing, business process management, rules management, network upgrades, and new or modified applications and databases.

- They don’t understand how to create a personalized user experience that enables nontechnical employees in different roles to monitor data or event streams, identify significant changes, quickly understand the correlation between events and develop a context adequate to enable determining the right decisions or actions to take.

Today’s fast-paced, 24-by-7 world has forced organizations to reduce the latency between when transactions and other data are recorded and when applications and BI systems are made aware of them and thus can take action. Furthermore, the introduction of low-cost sensors and the instrumentation of devices ranging from appliances and airline engines to crop management and animal feeding systems creates opportunities that have never before existed. Technological developments such as smart utility meters, RFID and embedded computing devices for environmental monitoring, surveillance and other tasks also are creating demand for tools that can provide insights in real time from continuous streams of event data.

As organizations expand business intelligence to serve operational needs by deploying dashboards and other portals, they are recognizing the need to implement technology and develop practices that collect events, correlate them into meaningful patterns and use workflow, rules and analytics to guide how employees and automated processes should react. In financial services, online commerce and other industries, for example, some organizations have built proprietary systems or have gone offshore to employ large teams of technicians at outsourcing providers to monitor transactions and event streams for specific patterns and anomalies. To reduce the cost, complexity and imperfections in these procedures, organizations now are seeking technology that can standardize and automate event processing and notify appropriate personnel of significant events in real time.
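To make the collect, correlate and react pattern more concrete, here is a minimal Python sketch of one possible correlation rule. It is an illustration only, not a description of any vendor's product: the `Event` and `Correlator` names, the event kinds and the thresholds are all hypothetical, and the rule (a large transfer shortly after repeated failed logins on the same account) is just an assumed example of a pattern worth flagging.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """A single event; all field names are hypothetical."""
    timestamp: float     # seconds since an arbitrary start
    account: str         # key used to correlate related events
    kind: str            # e.g. "login_failed" or "transfer"
    amount: float = 0.0  # only meaningful for transfers


class Correlator:
    """Flags a large transfer preceded by repeated failed logins on one account."""

    def __init__(self, window_seconds=300.0, min_failures=3, large_amount=10_000.0):
        self.window_seconds = window_seconds
        self.min_failures = min_failures
        self.large_amount = large_amount
        # account -> timestamps of failed logins still inside the window
        self._failures = defaultdict(deque)

    def process(self, event):
        """Consume one event; return an alert string if the rule fires, else None."""
        failures = self._failures[event.account]
        # Drop failed logins that have aged out of the correlation window.
        while failures and event.timestamp - failures[0] > self.window_seconds:
            failures.popleft()
        if event.kind == "login_failed":
            failures.append(event.timestamp)
            return None
        if (event.kind == "transfer"
                and event.amount >= self.large_amount
                and len(failures) >= self.min_failures):
            return (f"ALERT account={event.account}: transfer of {event.amount:.2f} "
                    f"after {len(failures)} failed logins")
        return None


def run(events):
    """Feed events to a correlator in time order and collect any alerts."""
    correlator = Correlator()
    alerts = []
    for event in sorted(events, key=lambda e: e.timestamp):
        alert = correlator.process(event)
        if alert is not None:
            alerts.append(alert)
    return alerts
```

In a real deployment the returned alert would feed a notification or workflow step rather than a list, and the rule itself would more likely be configured than hard-coded.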

Conventional database systems are geared to manage discrete sets of data for standard BI queries, but event streams from sources such as sensing devices typically are continuous, and their analysis requires tools designed to enable users to understand causality, patterns, time relationships and other factors. These requirements have led to innovation in event stream processing, event modeling, visualization and analytics. More recently the advent of open source and Hadoop-related big data technologies such as Flume, Kafka, Spark and Storm is enabling a new foundation for operational intelligence. Innovation in the past few years has occurred in both the open source community and proprietary implementations.
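The difference between querying a stored table and analyzing a continuous stream can be sketched in a few lines of Python. The example below is an assumption-laden illustration rather than how any of the technologies named above work internally: the function name `detect_anomalies`, its parameters and the simple three-standard-deviation rule are all hypothetical choices. It keeps only a sliding time window of recent readings and flags a value as soon as it arrives if it deviates sharply from that window.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(readings, window_seconds=60.0, threshold=3.0, min_samples=5):
    """Yield (timestamp, value) pairs that deviate strongly from the recent window.

    `readings` is any iterable of (timestamp, value) pairs in time order, so it
    can be an unbounded generator rather than a finished table.
    """
    window = deque()  # (timestamp, value) pairs from the last window_seconds
    for timestamp, value in readings:
        # Evict readings that are older than the window before comparing.
        while window and timestamp - window[0][0] > window_seconds:
            window.popleft()
        if len(window) >= min_samples:
            values = [v for _, v in window]
            mu = mean(values)
            sigma = pstdev(values)
            # Flag the reading if it is more than `threshold` deviations away.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield timestamp, value
        window.append((timestamp, value))
```

Because the function is a generator, each anomaly is reported as soon as the reading that triggers it is consumed, without waiting for the stream to end. One simplification to note: a flagged reading is still added to the window, so it influences the statistics used for later readings.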

Many of the early adopters of operational intelligence technologies were in financial services and intelligence, online services and security. However, as organizations across a range of other industries seek new competitive advantages from information or require real-time insight for risk management and regulatory compliance, demand is increasing broadly for OI technologies. Organizations are considering how to incorporate event-driven architectures, monitor network activity for significant event patterns and bring event notification and insight to users through both existing and new dashboards and portals.

To help understand how organizations are tackling these changes Ventana Research is conducting benchmark research on The Internet of Things and Operational Intelligence. The research will explore how organizations are aligning themselves to take advantage of trends in operational intelligence and IoT. Such alignment involves not just information and technology, but people and processes as well. For instance, IoT can have a major impact on business processes, but only if organizations can realign IT systems to a discover-and-adapt rather than a model-and-apply paradigm. Business processes are often outlined in PDF documents or through business process systems. However, these processes are often carried out unevenly and in ways that differ from the model as it was conceived. As more process flows are directly instrumented and some processes carried out by machines, the ability to model directly based on the discovery of those event flows and to adapt to them (either through human learning or machine learning) becomes key to successful organizational processes.

By determining how organizations are addressing the challenges of implementing these technologies and aligning them with business priorities, this research will explore a number of key issues, the following among them:

- What is the nature of the evolving market opportunity? What industries and LOBs are most likely to adopt OI for IoT?

- What is the current thinking of business and IT management about the potential of improving processes, practices and people resources through implementation of these technologies?

- How far along are organizations in articulating operational intelligence and IoT objectives and implementing technologies, including event processing?

- Compared to IT management, what influence do various business functions, including finance and operations management, have on the process of acquiring and deploying these event-centered technologies?

- What suppliers are organizations evaluating to support operational intelligence and IoT, including for complex event processing, event modeling, visualization, activity monitoring, and workflow, process and rules management?

- Who are the key decision-makers and influencers within organizations?

Please join us in this research. Fill out the survey to share your organization’s existing and planned investments in the Internet of Things and operational intelligence. Watch this space for a report of the findings when the research is completed.

Regards,

David Menninger

SVP & Research Director
